Introduction

Neuromorphic Computing as the Next Evolutionary Step for Artificial Intelligence

Today we are living through a great technological transformation that affects every aspect of our lives. Thanks to scientific advances in recent years, we have a much wider range of devices that record and store data with higher precision and at a higher rate than ever before. We are all an integral part of this technological revolution: every human being produces data with every simple click on the internet. Today we have a unique opportunity to analyze this data to better understand the world around us.

One of the most effective tools for analyzing this data today is artificial intelligence. With the advent of the new millennium, A.I. was pulled out of its “ice age”, a long period in which its development reached no key milestones, mainly due to the lack of appropriate hardware. Over the last decade, A.I. has experienced a huge boom, and terms such as “deep learning” have become buzzwords of the startup scene and of technological progress. Unfortunately, today we are hitting a barrier again, this time in the form of constraints in chip design and the physics of quantum phenomena. Moore’s law, the observation that the number of transistors on a chip doubles roughly every two years (an often-quoted variant says every 18 months), no longer holds. Compared with the amount of stored data and the demand for its analysis, progress is simply becoming insufficient.
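To make the doubling concrete, here is a minimal Python sketch of an idealized Moore’s law, N(t) = N0 · 2^(t / T), where T is the doubling period. The starting count of one billion transistors and the ten-year horizon are purely illustrative assumptions, not figures for any real chip.

```python
def transistor_count(initial_count: float, years: float,
                     doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's law: N(t) = N0 * 2 ** (t / T)."""
    return initial_count * 2 ** (years / doubling_period_years)


# Compare the two commonly quoted doubling periods over one decade,
# starting from a hypothetical chip with 1 billion transistors.
start = 1e9
for period in (1.5, 2.0):
    projected = transistor_count(start, years=10, doubling_period_years=period)
    print(f"doubling every {period} years -> {projected / 1e9:.1f} billion transistors")
```

Over ten years, an 18-month doubling period yields roughly three times as many transistors as a two-year one (about 102 versus 32 billion), which is why the chosen period matters when people argue about whether the law still holds.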

Why do conventional computer architectures fail to meet demand? They simply can’t scale.

Currently, we face three main problems:

● energy consumption: chips consume an increasing amount of power, and this remains one of the biggest challenges for developers, especially when designing chips for mobile devices. Following Moore’s law, the number of transistors on a chip keeps growing, but the more transistors there are, the harder it becomes to use all of them efficiently. Dark silicon is the term for the phenomenon in which, because of power and thermal limits, only part of the chip can be active at any point in time while the rest remains “in the dark”.

● heat production: every year the number of transistors on a chip increases as transistors get smaller, which opens up another problem, overheating. More and more transistors are packed into a smaller and smaller space, and heat dissipation becomes a major challenge, not only for the chips themselves and their design but also for data centers, where cooling systems consume a large amount of energy.

● memory bandwidth: the rate at which the processor can read data from or write data to semiconductor memory, usually expressed in bytes per second. The speed at which data moves between the processor and memory is the most fundamental problem of the current von Neumann architecture (often called the von Neumann bottleneck), and it is simply no longer good enough.
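A back-of-the-envelope Python sketch shows why memory bandwidth, rather than raw compute, often limits neural network workloads on a von Neumann machine. It assumes a hypothetical processor with 100 GB/s of memory bandwidth and 1 TFLOP/s of peak compute, and a single 4096 × 4096 layer whose 32-bit weights are each fetched once from memory and used in one multiply-add; input and output vectors and caches are ignored.

```python
def layer_timing(rows: int, cols: int, bandwidth_bytes_per_s: float,
                 peak_flops: float, bytes_per_weight: int = 4) -> tuple[float, float]:
    """Return (memory_time_s, compute_time_s) for one matrix-vector product.

    Assumes every weight is read from memory once and used in a single
    multiply-add (2 floating-point operations). Vectors and caches are ignored.
    """
    n_weights = rows * cols
    memory_time = n_weights * bytes_per_weight / bandwidth_bytes_per_s
    compute_time = 2 * n_weights / peak_flops
    return memory_time, compute_time


# Hypothetical machine: 100 GB/s memory bandwidth, 1 TFLOP/s peak compute.
mem_t, comp_t = layer_timing(4096, 4096, bandwidth_bytes_per_s=100e9, peak_flops=1e12)
print(f"memory: {mem_t * 1e3:.3f} ms, compute: {comp_t * 1e3:.3f} ms")
print(f"moving the weights takes ~{mem_t / comp_t:.0f}x longer than computing with them")
```

Under these assumptions, the processor spends most of its time waiting for data rather than calculating, which is the bottleneck described in the list above.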

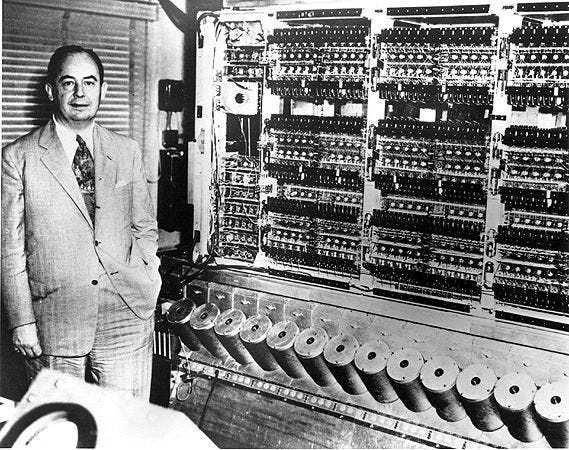

Not only chips but computer hardware in general has been developed for decades within the von Neumann architecture, whose basic feature is centralization. The architecture originates in the work of the mathematician and physicist John von Neumann in the 1940s. Its essence is the exchange of data between a central processor and separate memory chips while calculations are executed sequentially, one step after another.

The von Neumann architecture has made an incredible contribution to science and technology for decades, but because it is centralized, it now faces serious scalability problems. The more an application needs to communicate with memory, the more memory bandwidth (the rate at which data is read from or written to memory) it requires. Although we have been able to build very efficient neural networks on this architecture, allowing us to analyze complex data sets and find patterns in them, we are also reaching the limits of what it can offer for cognitive tasks.

At the beginning of the millennium, thanks to the renewed interest in A.I. and the advent of new algorithms for neural networks, we began to recognize this growing problem and to look for new chip architectures that would be more efficient. The inspiration is our own biological brain, and the field of Neuromorphic Computing, whose roots go back to Carver Mead’s work in the late 1980s, gained new momentum.

Why is the human brain the best inspiration for a new direction in chip architecture design, such as neuromorphic architecture? In short, the human brain excels at finding interesting patterns in vast amounts of noisy data. Another reason is its distributed memory and massive parallelism, which make its architecture literally the opposite of the von Neumann architecture and an excellent model for upgrading current A.I. and neural networks.

Why is a biological brain the perfect inspiration for us?

● effective categorization

● the information is processed in parallel

● low energy consumption

● fault-tolerance

Conceptual categorization and representation problem

The greatest benefit of neural networks is that they address the “representation problem”, or conceptual categorization. Conceptual categorization requires creating a representation in computer memory onto which stimuli, in the form of data inputs from the outside world, can be mapped. For example, “Clifford” would be mapped to “animal” and “dog”, while a Volkswagen Beetle would be mapped to “machine” and “car”. Creating a robust and comprehensive mapping of data inputs from the outside world is very difficult because individual categories and their members can vary greatly in their characteristics: a “human”, for example, can be male or female, old or young, and tall or short. Even a simple object such as a cube looks different depending on the angle from which it is observed. These conceptual categories are first and foremost constructs of the human mind, so it is natural to take inspiration from how the human brain stores and works with these conceptual representations.

Neural networks store these representations in connections between neurons (called synapses), each of which holds a value called a “weight”. Instead of being explicitly programmed, neural networks learn which weights to use through training. Neural networks have become the dominant method for classification tasks such as handwriting recognition, voice transcription, and object recognition. In the human brain, each neuron works in parallel with the others, and information is decentralized and distributed across groups of neurons. Because neurons work in a very simple way (they basically only receive and send signals), our brain is extremely energy efficient, consuming only about 20 W of power.
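The idea that weights are learned rather than programmed can be illustrated with a minimal Python sketch of the classic perceptron learning rule, a much simpler relative of the gradient-based training used in modern deep learning. The task (logical AND), the learning rate, and the number of epochs are illustrative choices, not taken from any particular system.

```python
def train_perceptron(samples, labels, epochs=10, learning_rate=1.0):
    """Learn weights and a bias with the classic perceptron update rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            prediction = predict(weights, bias, x)
            error = target - prediction  # -1, 0 or +1
            # Nudge each weight in the direction that reduces the error.
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, x):
    """Fire (1) if the weighted sum of inputs plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Logical AND: the rule is never written down, only shown as examples.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print("weights:", w, "bias:", b)
print("predictions:", [predict(w, b, x) for x in X])
```

The rule for AND never appears in the code; it emerges in the two weights and the bias after a few passes over the examples.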

Massive parallelism

Thanks to evolution, our brain has become an extremely powerful tool for finding interesting patterns and processing stimuli in the form of data inputs from our sensory organs. We certainly do not yet know everything about the brain, but the essential feature that neuromorphic systems have taken from it as inspiration is its massive parallelism.

Artificial neural networks are mathematical models that greatly simplify how networks of neurons work in the biological brain. However, today’s hardware is very inefficient at simulating neural network models. The reason for this inefficiency is the fundamental difference between how the biological brain and today’s digital computers function. While digital computers work with 0s and 1s, the synaptic values (weights) that the brain uses to store information can take any value within a continuous range; in other words, the brain works on an analog principle. More importantly, in a computer the number of signals that can be processed at one time is limited by the number of CPU cores: typically between 8 and 12 on today’s personal computers, and up to millions on the largest supercomputers. While millions of cores sound like a lot, it is still a pitifully low number compared to the brain, which processes up to trillions (1,000,000,000,000) of signals simultaneously in a massively parallel manner.

Energy efficiency

These two main differences between the biological brain and today’s computers (parallelism and analog processing) contribute to a third one, already outlined above: energy efficiency. Evolution has shaped our brain in this direction as well. Because finding nutritious food was very challenging for our ancestors, the brain adapted to the point that today its power consumption does not exceed about 20 W. For comparison, simulating the brain with today’s computers would consume millions of watts and would require a large number of supercomputers. A major reason for this higher power consumption is the high clock frequencies at which today’s digital computers operate, as opposed to the brain, which works at very low frequencies.
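The link between clock frequency and power can be sketched with the standard first-order estimate of switching power in CMOS digital chips, P ≈ α · C · V² · f, where α is the fraction of the circuit switching per cycle, C the switched capacitance, V the supply voltage, and f the clock frequency. The Python sketch below uses made-up parameter values purely for illustration; it is not a model of any real processor, nor of the brain.

```python
def dynamic_power_watts(activity: float, capacitance_farads: float,
                        voltage_volts: float, frequency_hz: float) -> float:
    """First-order CMOS switching power: P = activity * C * V**2 * f."""
    return activity * capacitance_farads * voltage_volts ** 2 * frequency_hz


# Hypothetical chip parameters (illustrative only, not a real processor).
ACTIVITY = 0.1        # fraction of the circuit switching each cycle
CAPACITANCE = 100e-9  # total switched capacitance in farads
VOLTAGE = 1.0         # supply voltage in volts

for freq_ghz in (1.0, 2.0, 4.0):
    power = dynamic_power_watts(ACTIVITY, CAPACITANCE, VOLTAGE, freq_ghz * 1e9)
    print(f"{freq_ghz:.0f} GHz -> {power:.0f} W")
```

At a fixed voltage, power grows linearly with frequency, and because higher frequencies usually also require a higher supply voltage, the real cost of running fast is steeper still.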

Fault tolerance

Another difference between neuromorphic chips and conventional computer hardware is that, like our brain, neuromorphic chips are fault-tolerant: if some components fail, the chip continues to function. Some neuromorphic chips are reported to keep working even when up to 25% of their components fail. This is a huge difference from today’s hardware, where a single failure can make the entire chip unusable. The need for extremely precise and difficult manufacturing of today’s ever-smaller chips has led to exponentially rising production costs. Neuromorphic chips do not require such high precision, so their production could be cheaper.

From the progress in medicine to A.G.I.

Neuromorphic software as a challenge for future pioneers

Neuromorphic hardware has been available for some time, but software remains largely a matter of research and development. The big challenge is massive parallelism. Today we can easily write software that runs in parallel on tens of processor cores, but writing software that runs on tens or hundreds of thousands of cores in parallel while using the chip as efficiently as possible is a huge challenge. To solve this problem, we must develop a genuinely new paradigm and new abstractions for programming languages and for programming in general.

Neuromorphic chips and their application today

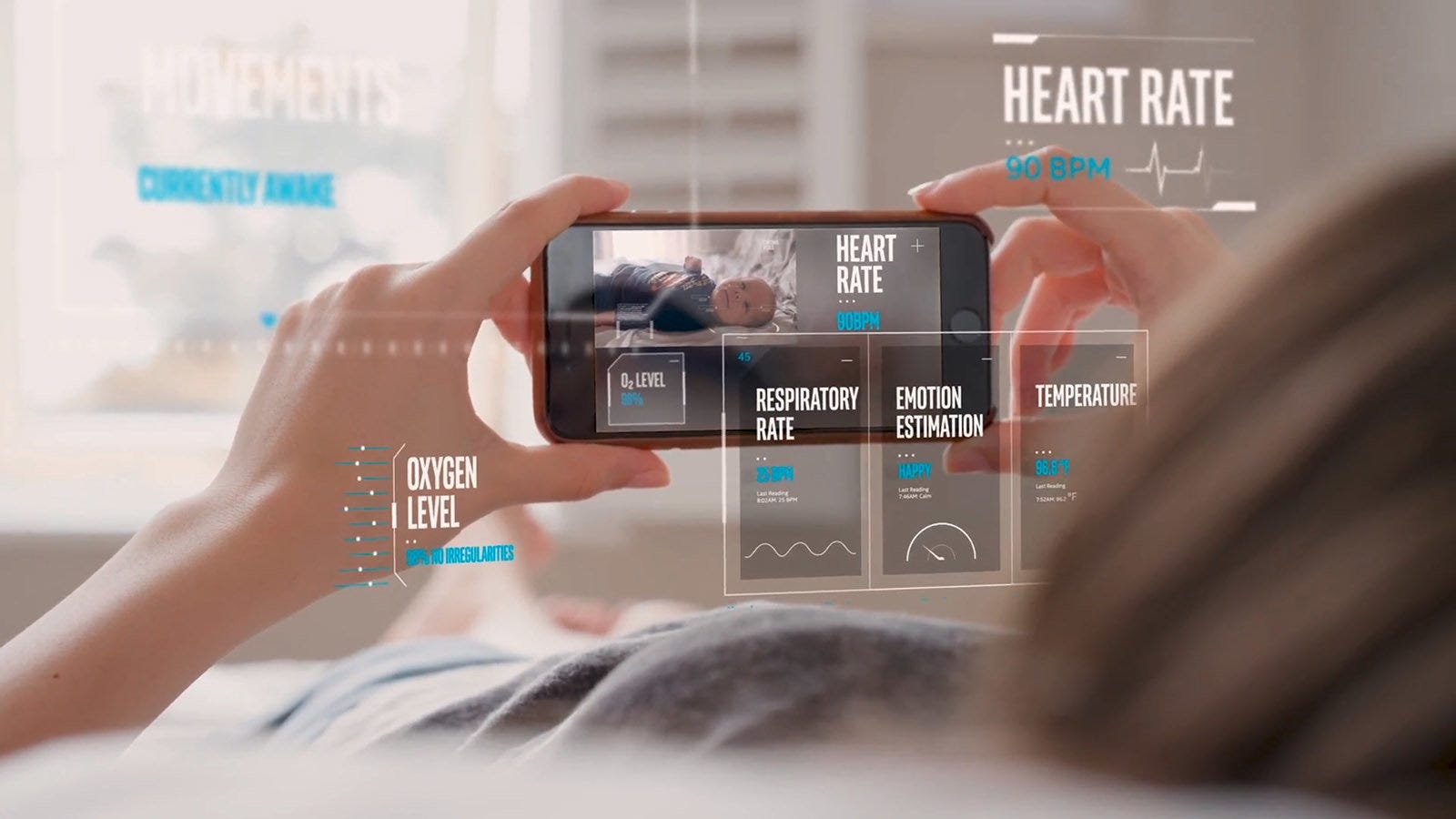

Today, neuromorphic chips are mostly used for simulating brain activity. Today’s hardware, whether conventional digital or neuromorphic, does not allow us to simulate the whole brain, only certain parts of it. In practice, this allows us to simulate, for example, the progression of a brain disease, which helps us understand how the disease develops over time and, at the same time, to simulate its treatment. One example is depression, where one proposed physiological cause is that low levels of the neuromodulator serotonin impair communication between neurons. This is something that can be simulated on neuromorphic hardware. All of this helps us better understand our brain and contributes not only to the development of medicine but also to the development of neuromorphic architectures and hardware. Another idea for using neuromorphic hardware in medicine is analyzing patient data to find similar patterns in databases of other patients, which could help define the problem more precisely and choose a more effective, or even individualized, treatment.

Instead of logic gates, a neuromorphic chip uses “spiking neurons” as its fundamental computing unit. These can pass along signals of varying strength, much like the neurons in our own brains, and they can fire when needed rather than being controlled by a clock like a regular processor. Source here.
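The behaviour described above can be sketched with a leaky integrate-and-fire (LIF) neuron, one of the simplest and most widely used spiking neuron models; the text does not say which model any particular chip implements, so treat this choice as an assumption. In this minimal Python sketch, the membrane potential integrates its input, slowly leaks back towards rest, and emits a spike only when it crosses a threshold. The time constant, threshold, and input values are illustrative, and the simulation advances in fixed time steps for simplicity, whereas event-driven hardware only does work when spikes occur.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    Returns the list of time-step indices at which the neuron spiked.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # Euler step of dv/dt = (-(v - v_rest) + current) / tau:
        # the potential leaks towards v_rest while integrating the input.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_threshold:
            spikes.append(step)  # fire only when the threshold is crossed
            v = v_reset          # then start integrating again from reset
    return spikes


# 20 steps of silence, then a constant input strong enough to cause spikes.
quiet_then_driven = [0.0] * 20 + [1.5] * 80
print(simulate_lif(quiet_then_driven))

# A stronger input makes the neuron fire more often.
print(len(simulate_lif([1.5] * 100)), len(simulate_lif([3.0] * 100)))
```

A weak input that never pushes the potential past the threshold produces no spikes at all; in event-driven hardware, such silent neurons do little or no work.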

What change will neuromorphic computing bring?

The advent of this new generation of computers could help neuroscientists fill the gaps in our understanding of the brain. In addition, if neuromorphic chips come into widespread commercial use, neuromorphic computers could fundamentally change how we interact with the machines we today call smart. Their possible uses range from smartphones and autonomous vehicles to the next generation of robots. This technology could bring the cognitive abilities of the biological brain to devices and machines that are currently limited in speed and performance and by high energy consumption. In fact, this could be a further step towards cognitive systems that are capable of learning by themselves and from their own memory, of understanding their surroundings better, and of justifying their decisions, as well as of helping people make better decisions, thereby setting A.I. on the path towards the first Artificial General Intelligence.

Neuromorphic Computing in Europe

Many experts in Europe and around the world are working on the development of neuromorphic systems, in both commercial development and academic research. For cognitive systems to become a broad part of ordinary people’s lives, however, active research and development is not enough. One of the most important steps is building a community not only of professionals and academics but also of enthusiasts, who will help shape demand and the direction of cognitive technology development. One organization that has set itself the goal of connecting people, especially enthusiasts, into such a community is Neuromorphics Europe. Its focus is bringing the latest advances in neuromorphic systems into the life of every human being and solving current problems in fields ranging from medicine and health care to transportation and smart cities. Its common goal with the community is to explore industrial use cases and new opportunities and to share the experience with the world.

Conclusion

Conventional computers have been, are, and will continue to be a great help in precise calculations and in the mathematical understanding of the world. Neuromorphic architectures, however, show particular promise for recognizing patterns in vast amounts of noisy data. Combining traditional computers with neuromorphic architectures could produce a very interesting hybrid, and that could be the first important step in the next evolution of artificial intelligence.
